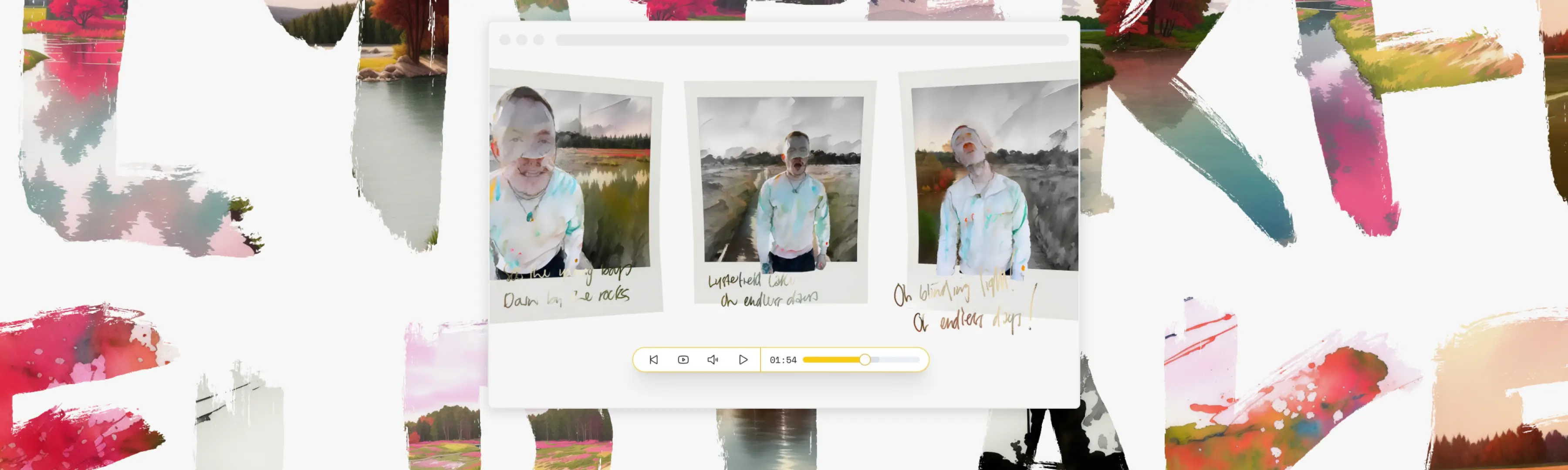

Lysterfield Lake is a song about a place outside Melbourne, Australia. It's about the endless summers of your youth, and the tiny changes in you that you don't even notice adding up. It's also about memories, which, like polaroids, fade and change over time.

I don't remember exactly when I wrote it, in much the same way I don't remember exactly when I started this project, which has spanned the better part of a year and all but consumed the most creative parts of my brain.

I think it all began when I came across Replicate (and, by extension, Cog, their open-source container for running machine learning models). I was so inspired by their Explore page and, if I'm being totally honest, worried about the cost of experimenting with AI. I'd set up a gaming PC in the weird limbo of the 2020 lockdowns, and these tools made it effortless (and, for the most part, free) to run models locally and to try, and test, and many, many times, fail.

Project Metadata

- Design, development, 3D modelling: Charlie Gleason
- Support and encouragement: Glen Maddern
- Company: Side project
- Timeline: Early 2023 - December 2023
- Platform: Web
- Industry: Music
- Libraries: Vite, React, Three.js, React Three Fiber
- Tools: Blender, After Effects

When I decided to release new music (it's been seven years since the last Brightly record), I knew I wanted to build something with the bits and pieces I'd been noodling with. I knew I wasn't great at making traditional music videos, and I felt empowered by the flexibility of the browser and the creative opportunities afforded by machine learning and AI. I think the result is something greater than the sum of its parts.

And there are a lot of parts.

(Also, if you haven't seen the video, you should go and check it out before I ruin the magic by shining a very bright light on exactly how it all works.)

(And if you'd rather check out a recording, you can jump over to YouTube to see a simplified version that should give you a pretty good idea.)

What is it?

Lysterfield Lake is a 3D generative interactive music video. It works in the browser, using Three.js and react-three-fiber to stitch together seven feeds of video frame by frame, turning a single piece of footage shot on an iPhone into a three-dimensional fever dream. It uses the accelerometer in your phone, if it's available, or your mouse if you're on a computer. As you watch, it shows and hides the protagonist depending on your actions, offering a myriad of ways to experience it. New dreams can be added at any time.
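To make the frame-by-frame idea concrete, here is a minimal sketch, written in Python rather than the app's JavaScript, of two ideas from the paragraph above. The first keeps seven frame sequences in lockstep by deriving every frame index from one shared clock. The second collapses phone tilt or mouse position into a single control value. The `Feed` type, the 30 fps rate, the 45° tilt range and both function names are assumptions for illustration, not taken from the project's code.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Feed:
    """A pre-rendered frame sequence (hypothetical structure)."""
    name: str
    frame_count: int


def frame_indices(feeds: list[Feed], elapsed_seconds: float, fps: float = 30.0) -> dict[str, int]:
    """Map one shared playback time to a frame index for every feed.

    All feeds read the same clock, so they stay in lockstep; a shorter
    feed wraps around to its first frame instead of running out.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    tick = math.floor(elapsed_seconds * fps)
    return {feed.name: tick % feed.frame_count for feed in feeds}


def control_value(
    *,
    tilt_degrees: float | None = None,
    pointer_x: float | None = None,
    viewport_width: float | None = None,
    max_tilt: float = 45.0,
) -> float:
    """Collapse phone tilt or mouse position into one value in [-1, 1].

    Tilt is used when available (phones); otherwise the pointer's
    horizontal position is used (computers); with neither, it rests at 0.
    """
    if tilt_degrees is not None:
        value = tilt_degrees / max_tilt
    elif pointer_x is not None and viewport_width:
        value = (pointer_x / viewport_width) * 2.0 - 1.0
    else:
        value = 0.0
    # Clamp so extreme tilts or off-screen pointers stay in range.
    return max(-1.0, min(1.0, value))


# Seven hypothetical feeds of 300 frames each.
feeds = [Feed(f"feed_{i}", 300) for i in range(7)]
indices = frame_indices(feeds, 12.0)  # every feed is on frame 60
tilt = control_value(tilt_degrees=-22.5)  # -0.5
pointer = control_value(pointer_x=600, viewport_width=800)  # 0.5
```

Deriving every index from a single clock, rather than letting each feed advance on its own, is one simple way to keep layers from drifting apart when some frames take longer to decode than others.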

Behind the scenes, it was modelled in Blender (using a polaroid model by Edoardo Galati), generated with Python and shell scripts, and built in JavaScript. It is made entirely from freely available open-source projects.

The process

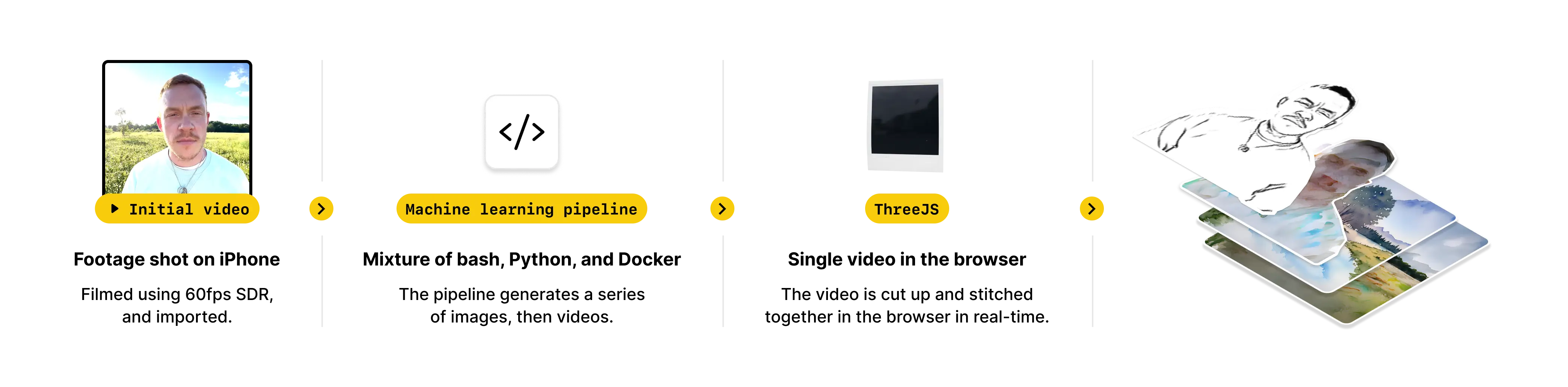

So, how does it work? I made a diagram to help.

First, a single video file, shot on an iPhone, is fed through a series of Python and shell scripts. The first one splits the video into individual frames. These frames then go through a bunch of processes, depending on their final output.
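A minimal sketch of that first step, assuming ffmpeg is installed and on the PATH; the project's own scripts may split frames differently. `split_command`, `split_video`, `fan_out`, the 30 fps sampling rate and the `%05d.png` naming are all hypothetical. `fan_out` stands in for "a bunch of processes": each named stage is any function that takes a frame path.

```python
from __future__ import annotations

import subprocess
from pathlib import Path
from typing import Callable


def split_command(video: Path, out_dir: Path, fps: float = 30) -> list[str]:
    """Build an ffmpeg command that writes the video out as numbered PNGs.

    The fps filter resamples to a fixed rate, so image N always
    corresponds to time N / fps in the footage.
    """
    return ["ffmpeg", "-i", str(video), "-vf", f"fps={fps}", str(out_dir / "%05d.png")]


def split_video(video: Path, out_dir: Path, fps: float = 30) -> list[Path]:
    """Run the split (requires ffmpeg on PATH) and return frames in order."""
    out_dir.mkdir(parents=True, exist_ok=True)
    subprocess.run(split_command(video, out_dir, fps), check=True)
    # Zero-padded names mean lexical order matches frame order.
    return sorted(out_dir.glob("*.png"))


def fan_out(
    frames: list[Path], stages: dict[str, Callable[[Path], object]]
) -> dict[str, list[object]]:
    """Send every frame through each named stage, keeping frame order."""
    return {name: [stage(frame) for frame in frames] for name, stage in stages.items()}
```

Sampling at a fixed rate keeps every derived feed the same length, which is one way to let a client address all of them with a single frame number.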

Okay, how do I find out more?

If you'd like to check it out, you can do so here:

https://lysterfieldlake.com/

If you'd like to share this project, that would be greatly appreciated. The project itself is entirely open-source, and available at the following GitHub repositories:

- Client App - A React app powered by Three.js and react-three-fiber.

- Pipeline - The pipeline that generates the video used by the app, powered by Python and shell scripts.

(Author's note: Fair warning—it's not the cleanest code, and in the case of the pipeline, it's not something that will work locally out of the box. I definitely intended open-sourcing this to be educational, in the sense of "oh, that's how he did that!", as opposed to aspirational, like "oh, that's how he thinks React should be written??" It was a passion project, so maybe bear that in mind. Cheers.)

Thanks to all my mates who came on country walks while I filmed myself awkwardly mouthing along to my own music. To Ian and Georgia for filming the real thing in Scotland. And to everyone who told me I'm not too old to keep making things.

Also, like all my projects, this one wouldn't have happened without the support and encouragement of Glen Maddern.

Produced and Mixed by James Seymour in Naarm.

Mastered by Andrei "Ony" Eremin in Philadelphia.

Thank you for listening.

@wearebrightly / wewerebrightly.com